Automated Performance Testing: Best Practices

Automated performance testing enables teams to reduce the risk of change, innovate faster, and have more confidence in the software they deploy to production. Automated performance tools enable teams to achieve these benefits by simulating user interactions and loads on an application, system, or service.

These tests then assess the target’s response times, throughput rates, and stability under different conditions without manual intervention. This type of testing aims to identify performance bottlenecks to ensure that a system meets specified performance criteria and delivers an optimal experience for end users.

Getting automated performance testing right requires a mix of tools, processes, and strategies. This article will take a deep dive into ten automated performance testing best practices that can help teams optimize their software quality.

Summary of key automated performance testing best practices

The table below summarizes the ten automated performance testing best practices this article will explore.

Ten best practices for great automated performance testing

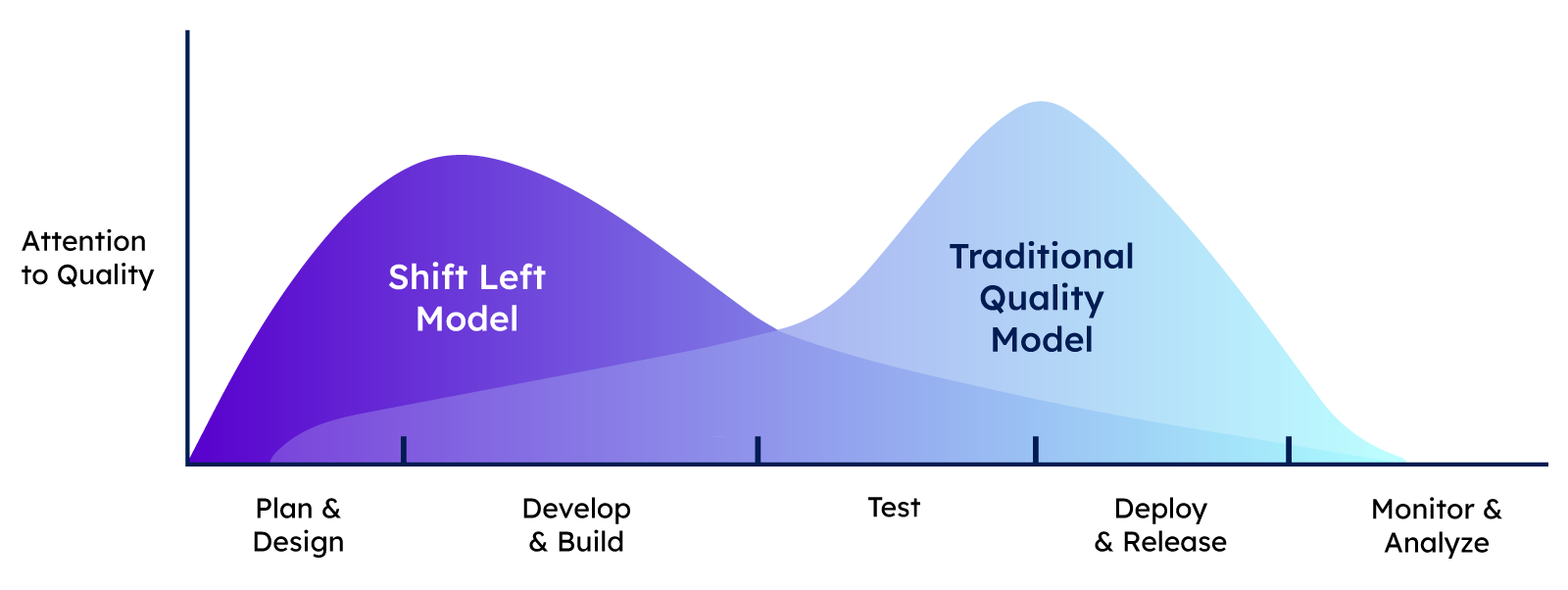

Historically, performance testing was often conducted near the end of a development cycle, when the application was nearing deployment to production. However, with the adoption of the "shift left" approach, performance testing is now being integrated earlier into the development lifecycle. This approach increases delivery velocity, reduces defect resolution cost, and can lead to better-engineered software designs.

The ten best practices below can help teams successfully shift automated performance testing left and improve their overall testing strategy.

Think like a user

The accuracy of performance testing is anchored in its authenticity. The resulting insights can be skewed or irrelevant if test cases are abstract or misaligned with real-world user behaviors. Crafting test scenarios that genuinely mirror user interactions ensures that the performance metrics obtained are rooted in reality.

For instance, if an e-commerce platform's real-world usage indicates that 70% of users employ the search function and filter results, then the test cases should prioritize and simulate this flow. By emulating genuine user activity, engineers ensure that the metrics and bottlenecks they identify actually matter. This also avoids the antipattern of optimizing for conditions that are rare or non-existent in actual usage.
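One way to keep simulated traffic proportional to real usage is to pick each simulated session's flow by weight. The sketch below is a minimal illustration: the 70% search share comes from the example above, while the other flows, their weights, and all function names are assumptions invented for this sketch.

```python
import random
from typing import Dict

# Hypothetical traffic mix. Only the 70% search share comes from the
# example in the text; the other two flows and weights are invented.
SCENARIO_WEIGHTS: Dict[str, float] = {
    "search_and_filter": 0.70,
    "browse_category": 0.20,
    "checkout": 0.10,
}


def pick_scenario(rng: random.Random) -> str:
    """Choose the next user flow to simulate, in proportion to its weight."""
    names = list(SCENARIO_WEIGHTS)
    weights = list(SCENARIO_WEIGHTS.values())
    return rng.choices(names, weights=weights, k=1)[0]


def plan_session_mix(total_sessions: int, seed: int = 0) -> Dict[str, int]:
    """Count how many simulated sessions each flow receives in one run."""
    rng = random.Random(seed)  # seeded so a run can be reproduced
    counts = {name: 0 for name in SCENARIO_WEIGHTS}
    for _ in range(total_sessions):
        counts[pick_scenario(rng)] += 1
    return counts
```

In a real suite, the weights would be derived from production analytics rather than typed in by hand.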

Inject environment variables

Today's application ecosystems are sprawling and multifaceted. From cloud environments to on-premises servers, from development landscapes to staging areas and production deployments, the sheer diversity can be staggering. Such heterogeneity demands that performance test scripts be versatile.

The pivotal technique here is the management of environment variables. By parameterizing these variables, developers ensure that the same test script can be swiftly tailored to diverse environments. Imagine a scenario where the server endpoint changes between development and production; instead of rewriting the entire test, a simple change in the environment variable can repurpose the test appropriately.

To do so, avoid hard-coding environment-specific values in the test script. Here is an example of what not to do:
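As a hypothetical illustration of the problem (the function name and hostnames are invented for this sketch), a script that bakes its targets into the code might look like this:

```python
# Anti-pattern: target URLs live inside the script, so every new
# environment needs another branch. All hostnames are hypothetical.
def get_base_url(environment: str) -> str:
    if environment == "production":
        return "https://shop.example.com"
    if environment == "staging":
        return "https://staging.shop.example.com"
    # Any unrecognized name silently falls back to the development server,
    # which can hide a misconfigured run.
    return "http://localhost:8080"
```

Every additional environment means editing and redeploying the test code, and a typo in the environment name quietly points the test at the wrong system.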

Instead, inject the environment variables from outside the test script:
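A minimal sketch of injected configuration, assuming the settings arrive as environment variables. The variable names (`PERF_BASE_URL`, `PERF_VIRTUAL_USERS`, `PERF_DURATION_SECONDS`) and the defaults are assumptions made for illustration, not a convention of any particular tool.

```python
import os
from typing import Dict, Mapping, Optional, Union


def load_config(
    env: Optional[Mapping[str, str]] = None,
) -> Dict[str, Union[str, int]]:
    """Build the test configuration from environment variables.

    The variable names are hypothetical. PERF_BASE_URL is required, so a
    missing value fails loudly with KeyError instead of silently testing
    the wrong system.
    """
    env = os.environ if env is None else env
    return {
        "base_url": env["PERF_BASE_URL"],
        "virtual_users": int(env.get("PERF_VIRTUAL_USERS", "10")),
        "duration_seconds": int(env.get("PERF_DURATION_SECONDS", "60")),
    }
```

The same script can then target any environment by changing only how it is launched, for example `PERF_BASE_URL=https://staging.example.com python perf_test.py`, where `perf_test.py` is a hypothetical script name.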

Another benefit of injecting environment variables is that it simplifies the code logic, which prevents defects. When hard-coded strings manage environments, engineers must add if statements to control the logic flow. For example, “if production, do this”, “if staging, do that”. Removing these statements and logic blocks prevents configuration defects by keeping the code as simple as possible.

Managing environment configuration this way ensures that the application's performance can be measured consistently and accurately wherever it resides.

Share test data between scripts

A key component of any test case is test data. However, as tests proliferate, managing this data can become challenging. One best practice that emerges as a solution is centralizing test data, ensuring consistent data is accessible across various test scripts.

Consistency in test data is essential for reliable comparisons. If one script tests the application under a specific data set and another uses a variant, the results can be misleading or inconsistent. Centralizing test data ensures that the underlying data remains consistent regardless of which script is executed.

Moreover, a centralized data repository fosters agility. As data requirements evolve or expand, changes can be made in a single location, instantly benefiting all associated test scripts.
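One simple way to centralize test data is a single shared file with small accessor functions that every script imports. The sketch below assumes a JSON file with `users` and `search_terms` keys. The file layout, key names, and function names are all hypothetical.

```python
import json
from pathlib import Path
from typing import Any, Dict, List


def load_test_data(path: Path) -> Dict[str, Any]:
    """Read the shared data set from one file that every script uses."""
    with path.open(encoding="utf-8") as handle:
        return json.load(handle)


def login_users(data: Dict[str, Any]) -> List[Dict[str, str]]:
    """Return a copy of the shared user accounts (hypothetical "users" key)."""
    return list(data["users"])


def search_terms(data: Dict[str, Any]) -> List[str]:
    """Return a copy of the shared search terms (hypothetical "search_terms" key)."""
    return list(data["search_terms"])
```

Because each script goes through the same accessors, a change to the data file reaches every test at once, and no script carries its own slightly different copy.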

Update dependencies regularly

Maintaining up-to-date dependencies and packages is crucial for the efficacy of automated performance testing. Outdated packages can introduce inefficiencies or incompatibilities that slow the testing process, produce unreliable results, or even lead to security vulnerabilities. Regularly updating these components ensures your testing environment reflects the latest standards and avoids unnecessary technical debt. There can also be new features in updated packages that allow for cleaner and more maintainable code.

In addition, the constant evolution of software tools means that newer versions of dependencies often come with optimizations and bug fixes. By keeping packages current, you not only benefit from these improvements but also ensure the reliability of your test code.

Use test lifecycle management

Logically structuring code often leads to better design. Breaking down the performance testing process into stages can drastically enhance productivity. A typical performance test lifecycle can be visualized as a series of stages, such as initial setup, test execution, subsequent teardown, and result reporting.

Here is an example in pseudocode of a test lifecycle commonly seen in test suites:

Each of these stages has its significance. The setup ensures the environment and prerequisites are primed for the test. The execution stage executes the test scenarios. The teardown resets the environment, ensuring that residual data or configurations from the previous run do not taint subsequent tests. Finally, the reporting stage extracts insights and evaluates performance metrics.

Having this type of structured approach ensures repeatability and brings clarity to the testing process. Moreover, engineers can limit redundancy by defining universal setup and teardown processes, ensuring a leaner, more efficient testing paradigm.

Write readable code

Readability in code is not just about aesthetics. It is a functional necessity. As teams scale up tests and they become more difficult to manage, readability becomes increasingly vital.

When a codebase is readable, it's immediately more accessible to team members. This accessibility ensures that other engineers can quickly engage with the tests and potentially offer optimizations or identify overlooked scenarios. Moreover, a readable codebase significantly reduces the onboarding time for new team members. Rather than grapple with cryptic functions or ambiguous logic flows, they can swiftly acquaint themselves with the testing suite and contribute effectively.

Furthermore, a transparent and logical code structure drastically reduces the chances of errors or oversights. When code is tangled and convoluted, latent defects are more likely, which could undermine the performance test results.

Prioritize simplicity

As performance testing becomes more intricate, the complexity of the test code can inadvertently increase, making it more challenging to decipher and analyze results. This complexity can introduce variables that make it harder to pinpoint the root causes of performance bottlenecks or anomalies. In worst-case scenarios, an overly complex test script might become a source of failure, confounding results and potentially leading to misguided optimization efforts.

It is therefore necessary to strike an appropriate balance between writing tests that adequately mimic real-world scenarios and keeping test code as simple as possible. Simplified code minimizes the chances of test code failures and aids in clearer interpretation of results. On the other hand, complex tests that do not add value can obfuscate genuine issues and hinder the main objective: optimizing performance based on actionable insights.

Establish clear goals

In performance testing, results are compared to expectations. These expectations, or benchmarks, are not arbitrary: they are the yardsticks that define acceptable performance, and they are derived from the goals a team sets. Setting them carefully is crucial. If they are too lenient, potential issues might be overlooked; if they are too stringent, developers might find themselves chasing inconsequential optimizations.

For example, suppose we wanted to set a threshold expectation:
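A descriptive threshold check might be sketched as follows. The checkout scenario, the 95th-percentile goal of two seconds, and all names are assumptions chosen for illustration. The percentile uses the simple nearest-rank method.

```python
import math
from typing import List

# Hypothetical goal: 95% of checkout requests complete within 2 seconds.
CHECKOUT_P95_THRESHOLD_SECONDS = 2.0


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("percentile requires at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def assert_checkout_p95_within_goal(response_times: List[float]) -> None:
    """Fail with a descriptive message when checkout p95 exceeds the goal."""
    p95 = percentile(response_times, 95)
    if p95 > CHECKOUT_P95_THRESHOLD_SECONDS:
        raise AssertionError(
            f"checkout p95 was {p95:.2f}s, "
            f"goal is {CHECKOUT_P95_THRESHOLD_SECONDS:.2f}s"
        )
```

The function name, the named constant, and the failure message together state which transaction is measured, which statistic is used, and what goal it is held to.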

The above describes the context of what we are testing and why. Compare the above test to the following, where the test is more vague and less descriptive:
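A hypothetical example of such a vague check, with invented names, might look like this:

```python
from typing import List


def check_speed(times: List[float]) -> None:
    # Vague on purpose: no named transaction, no stated goal or unit,
    # and a magic number with no explanation of where it came from.
    assert max(times) < 5
```

A failure here says nothing about which transaction was slow, what the limit represents, or why it matters.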

A pragmatic approach is to ground these benchmarks in real-world expectations. For instance, if industry standards suggest that a specific transaction should be completed within two seconds, that becomes a meaningful benchmark. When tests consistently breach this threshold, it's a clear indicator that optimizations are warranted.

Shift testing efforts left

“Shifting left” refers to moving tasks, processes, or testing earlier in the development or project lifecycle. This practice is essential in testing. By executing tests early and frequently, developers ensure that changes — such as code modifications or environment updates — are immediately evaluated for performance implications. Running tests often also has the benefit of ensuring that they are usable and up to date.

Furthermore, by integrating performance tests early into the development process, potential issues are flagged at the earliest stages possible. Running tests as soon as possible also leaves more room to adapt: if a test environment goes down, for example, there is still time to recover and rerun the tests. This early detection reduces the cost of rectification and ensures that performance remains a focal point and not an afterthought as the application evolves.

Compare test result trends over time

While isolated test results offer value, the real power of performance testing is realized when outcomes are assessed over time. Trends and patterns often reveal insights that single test runs might obscure.

For instance, if a particular module's response time has steadily increased over multiple test iterations, it might hint at a creeping inefficiency. Such progressive insights enable developers to address issues proactively before they escalate into tangible performance bottlenecks.

Storing test results meticulously and then employing tools to visualize trends can significantly increase the strategic value of performance testing, turning it from a reactive tool into a proactive strategy.
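A creeping slowdown like the one described above can be flagged with a simple trend calculation over stored results. The sketch below fits a least-squares line to a metric across consecutive runs and compares its slope to a tolerance. The function names and the tolerance are assumptions for illustration, and a real pipeline would likely read the values from a results store.

```python
from statistics import mean
from typing import Sequence


def trend_slope(values: Sequence[float]) -> float:
    """Least-squares slope of values against run index (0, 1, 2, ...)."""
    n = len(values)
    if n < 2:
        raise ValueError("at least two runs are needed to compute a trend")
    x_mean = (n - 1) / 2
    y_mean = mean(values)
    numerator = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    denominator = sum((x - x_mean) ** 2 for x in range(n))
    return numerator / denominator


def is_degrading(values: Sequence[float], max_slope: float) -> bool:
    """True when the metric grows faster per run than the allowed slope."""
    return trend_slope(values) > max_slope
```

For example, with hypothetical p95 response times in milliseconds from five consecutive runs, `is_degrading([200, 205, 212, 220, 231], max_slope=5.0)` flags the series, even though no single run looks alarming on its own.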

Conclusion

As software development evolves, performance testing matures along with it. Through automated performance testing, developers can simulate real-world user interactions and assess the performance of their software under varying conditions. This practice goes beyond merely identifying bottlenecks: it helps ensure that systems meet designated criteria and deliver an optimal user experience. The integration of "shift left" strategies brings performance testing earlier into the development lifecycle, optimizing not just the software but the entire development process.

To make the most of automated performance testing, certain best practices stand out. Clear, readable code ensures swift collaboration and minimizes errors. Crafting test cases that authentically mirror user interactions provides relevant and actionable insights. Proper management of diverse environments through parameterization enhances versatility. Structuring tests via lifecycle management, sharing data across scripts, setting realistic expectations, analyzing trends over time, and the consistent, early execution of tests all come together to create a robust and effective testing strategy.

Embracing these best practices isn't just about improving the efficiency of individual test runs. It's about instilling a culture of performance-oriented development, where every code change, every user interaction, and every system response is viewed through the lens of optimal performance. With the best practices outlined in this article, developers are well-equipped to rise to this challenge.